YOLOv5 is a family of object detection architectures and models pretrained on the COCO dataset. It represents Ultralytics' open-source research into future vision AI methods, incorporating lessons learned and best practices evolved over thousands of hours of research and development.

YOLO, an acronym for 'You Only Look Once', is an object detection algorithm that divides an image into a grid. Each cell in the grid is responsible for detecting the objects whose centers fall inside it.
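The grid idea can be illustrated with a minimal sketch that maps an object's center point to the cell that contains it. This is a simplified illustration of the general YOLO concept, not YOLOv5's actual implementation (which, among other things, predicts on several grids at different scales). The function name `responsible_cell` and the 7x7 grid size are assumptions chosen for the example.

```python
def responsible_cell(center_x, center_y, image_w, image_h, grid_size):
    """Return the (row, col) of the grid cell that contains an object's center."""
    # Scale the pixel coordinate into grid units, then clamp to the last cell
    # so that a center lying exactly on the right/bottom edge stays in range.
    col = min(int(center_x / image_w * grid_size), grid_size - 1)
    row = min(int(center_y / image_h * grid_size), grid_size - 1)
    return row, col


# A 1280x720 image split into a hypothetical 7x7 grid.
print(responsible_cell(640, 360, 1280, 720, 7))  # center of the image
print(responsible_cell(100, 650, 1280, 720, 7))  # near the bottom-left corner
```

Whichever cell is returned is the one that would be expected to report the object in this simplified picture.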

YOLO is one of the most famous object detection algorithms due to its speed and accuracy.

Image source: https://pytorch.org/hub/ultralytics_yolov5/

Table of Contents:

- Prerequisites

- Install Dependencies

- Load YOLOv5 model from PyTorch Hub

- Detect and Recognize Objects

- Object Bounding Box Coordinates Prediction

- Complete Example

Prerequisites

Python>=3.8

PyTorch>=1.7
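A quick way to check these two requirements is sketched below. The helper names `parse_version` and `meets_minimum` are hypothetical, and the version parsing is deliberately simple: it only reads the leading numeric parts of a version string and ignores build suffixes such as `+cu117`.

```python
import re
import sys


def parse_version(text):
    """Turn a string like '1.13.1+cu117' into a tuple like (1, 13, 1)."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break  # stop at the first non-numeric component
        parts.append(int(match.group()))
    return tuple(parts)


def meets_minimum(installed, minimum):
    """Return True if the installed version is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


print("Python OK:", sys.version_info >= (3, 8))

try:
    import torch
    print("PyTorch OK:", meets_minimum(torch.__version__, "1.7"))
except ImportError:
    print("PyTorch is not installed")
```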

To install PyTorch, see https://pytorch.org/get-started/locally/.

Install Dependencies

Install the YOLOv5 dependencies using the following pip command.

pip install -qr https://raw.githubusercontent.com/ultralytics/yolov5/master/requirements.txt

Load YOLOv5 model from PyTorch Hub

YOLOv5 models of several sizes are available on PyTorch Hub, including yolov5s, yolov5m, yolov5l, and yolov5x. Load any of them using the following command.

    import torch

    # Load model
    model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

You may see output like the following when the model is loaded successfully.

    Fusing layers...
    Model Summary: 224 layers, 7266973 parameters, 0 gradients
    Adding AutoShape...

Image source: https://pytorch.org/hub/ultralytics_yolov5/

Detect and Recognize Objects

With the YOLOv5 model loaded, we can use it to detect and recognize objects in one or more images.

Input: URL, Filename, OpenCV, PIL, Numpy or PyTorch inputs

Output: Return detections in torch, pandas, and JSON output formats

We can print the model output or save it. The input can be given in any of the following forms:

    filename: imgs = 'data/images/zidane.jpg'
    URI:      imgs = 'https://github.com/ultralytics/yolov5/releases/download/v1.0/zidane.jpg'
    OpenCV:   imgs = cv2.imread('image.jpg')[:,:,::-1]  # HWC BGR to RGB x(640,1280,3)
    PIL:      imgs = Image.open('image.jpg')  # HWC x(640,1280,3)
    numpy:    imgs = np.zeros((640,1280,3))  # HWC
    torch:    imgs = torch.zeros(16,3,320,640)  # BCHW (scaled to size=640, 0-1 values)
    multiple: imgs = [Image.open('image1.jpg'), Image.open('image2.jpg'), ...]  # list of images

Now take the zidane.jpg image from ultralytics.com, run detection on it, and save the results.

    # Take image/s
    imgs = ['https://ultralytics.com/images/zidane.jpg']  # batch of images

    # Find the detection results
    results = model(imgs)

    # Save the result
    results.save()

results.save() creates the directory runs\detect\exp (runs/detect/exp on Linux and macOS) and saves the annotated image/s in it.
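To locate the saved files afterwards, a small helper along these lines may be useful. It is only a sketch: the function name `latest_run_dir` is hypothetical, and it assumes that repeated saves produce sibling directories whose names start with `exp` (such as `exp`, `exp2`, `exp3`) under `runs/detect`.

```python
from pathlib import Path


def latest_run_dir(base="runs/detect"):
    """Return the most recently modified 'exp*' directory under base, or None."""
    base_path = Path(base)
    if not base_path.is_dir():
        return None
    # Assumption: every saved run lives in a directory whose name starts with 'exp'.
    runs = [p for p in base_path.glob("exp*") if p.is_dir()]
    if not runs:
        return None
    return max(runs, key=lambda p: p.stat().st_mtime)


run_dir = latest_run_dir()
if run_dir is None:
    print("No saved detection runs found")
else:
    for image_path in sorted(run_dir.iterdir()):
        print(image_path)
```

Using pathlib keeps the path handling the same on Windows, Linux, and macOS.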

Below is the saved image after object detection.

Two persons and two ties are detected.

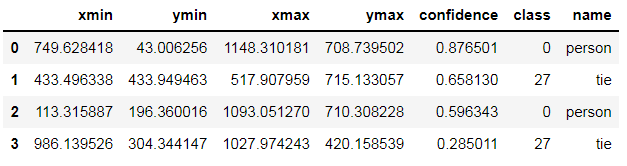

Object Bounding Box Coordinates Prediction

The bounding box coordinates of the detected objects are available as a tensor via .xyxy and as a pandas DataFrame via .pandas().xyxy. Each tensor row holds xmin, ymin, xmax, ymax, confidence, and class index, in that order.

results.xyxy[0]

Output:

    tensor([[7.49628e+02, 4.30063e+01, 1.14831e+03, 7.08740e+02, 8.76501e-01, 0.00000e+00],
            [4.33496e+02, 4.33949e+02, 5.17908e+02, 7.15133e+02, 6.58130e-01, 2.70000e+01],
            [1.13316e+02, 1.96360e+02, 1.09305e+03, 7.10308e+02, 5.96343e-01, 0.00000e+00],
            [9.86140e+02, 3.04344e+02, 1.02797e+03, 4.20159e+02, 2.85011e-01, 2.70000e+01]])
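To make the rows above easier to read, the sketch below decodes them by hand using plain Python lists, so it runs without a loaded model. The values are copied from the tensor output, and the `CLASS_NAMES` dictionary lists only the two COCO class indices that appear there (0 and 27); with a loaded model, the full mapping is available as `model.names`. The `describe` helper is a hypothetical name used for illustration.

```python
# Rows copied from the tensor output: xmin, ymin, xmax, ymax, confidence, class index.
detections = [
    [749.628, 43.0063, 1148.31, 708.740, 0.876501, 0.0],
    [433.496, 433.949, 517.908, 715.133, 0.658130, 27.0],
    [113.316, 196.360, 1093.05, 710.308, 0.596343, 0.0],
    [986.140, 304.344, 1027.97, 420.159, 0.285011, 27.0],
]

# Only the class indices seen above; this is not the full COCO list.
CLASS_NAMES = {0: "person", 27: "tie"}


def describe(row):
    """Convert one detection row into a small, readable dictionary."""
    xmin, ymin, xmax, ymax, confidence, class_index = row
    return {
        "name": CLASS_NAMES.get(int(class_index), "unknown"),
        "confidence": round(confidence, 2),
        "width": round(xmax - xmin, 1),    # box width in pixels
        "height": round(ymax - ymin, 1),   # box height in pixels
    }


for row in detections:
    print(describe(row))
```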

The same results can be viewed as a pandas DataFrame.

results.pandas().xyxy[0]

Detected object results in pandas format.
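A common next step is to keep only confident detections and count them per class. The sketch below does this on a list of dictionaries rather than on the DataFrame itself, so it needs no extra packages; it assumes the DataFrame exposes `name` and `confidence` columns, in which case records of this shape could be obtained with something like `results.pandas().xyxy[0].to_dict("records")`. The `count_confident` helper and the 0.5 threshold are illustrative choices.

```python
from collections import Counter

# Hypothetical records mirroring the 'name' and 'confidence' columns of the DataFrame.
records = [
    {"name": "person", "confidence": 0.876501},
    {"name": "tie", "confidence": 0.658130},
    {"name": "person", "confidence": 0.596343},
    {"name": "tie", "confidence": 0.285011},
]


def count_confident(records, threshold=0.5):
    """Count detections per class name, keeping only those at or above the threshold."""
    return Counter(r["name"] for r in records if r["confidence"] >= threshold)


print(count_confident(records))        # only detections with confidence >= 0.5
print(count_confident(records, 0.25))  # a lower threshold keeps all four
```

With the default threshold, the low-confidence tie (about 0.29) is dropped, which shows how the threshold trades missed objects against false positives.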

Complete Example

See the complete example below, which loads the model, detects and recognizes objects, and returns the bounding box coordinates in tensor or pandas format.

    import torch

    # Load the model
    model = torch.hub.load('ultralytics/yolov5', 'yolov5s', pretrained=True)

    # Images
    imgs = ['https://ultralytics.com/images/zidane.jpg']  # batch of images

    # Run inference
    results = model(imgs)

    # Results
    results.print()  # or results.save() or results.show()
    results.xyxy[0]  # img1 predictions (tensor)
    results.pandas().xyxy[0]  # img1 predictions (pandas)
